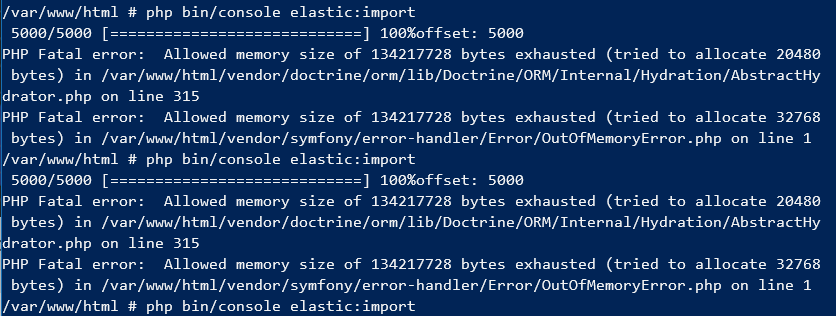

How to deal with large Doctrine datasets - Symfony console command - OutOfMemoryError - PHP Fatal error: Allowed memory size of * bytes exhausted

Imagine that you want to import your 5000+ items from database to ElasticSearch and you have restricted memory limits (e.g. 128MB). You will probably end up with PHP Fatal error: Allowed memory size of 134217728 bytes exhausted.

What you have to do?

You have to unconfigure logger, clear entity manager, each time, when you move your offset and unset $data in while/foreach.

Example here

<?php

namespace App\Command;

class ElasticImportCommand extends Command

{

protected static $defaultName = 'elastic:import';

...

protected function execute(InputInterface $input, OutputInterface $output): int

{

$io = new SymfonyStyle($input, $output);

...

//unset logger

$this->entityManager->getConnection()->getConfiguration()->setSQLLogger(null);

$offset = 0;

$limit = 5000;

/** @var Query $query */

while ($data = $repository->getRecipesToIndex($offset, $limit)) {

$progressBar = new ProgressBar($io, count($data));

$progressBar->start();

foreach ($data as $recipe) {

$indexer->scheduleIndex('recipe', new Document((string)$recipe->getId(), $this->createRecipeDto($recipe)));

$progressBar->advance();

}

//unset data

\unset($data);

$offset += $limit;

$io->writeln('offset: ' . $offset);

$progressBar->finish();

//flush your indexer interface

$indexer->flush();

//clear entity manager - this is important

$this->entityManager->clear();

}

return Command::SUCCESS;

}

}